Technology vs. Human Rights: Notes from the MIGS AI Forum

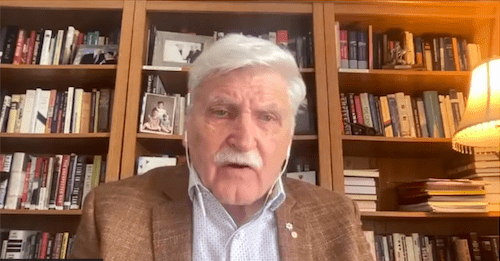

General Roméo Dallaire speaking on AI and human rights at the MIGS Forum

Kyle Matthews and Marie Lamensch

April 29, 2021

Montreal is recognized as a key dateline in the evolution of human rights protection, from McGill law professor John Humphrey’s work with Eleanor Roosevelt in the development of the Universal Declaration of Human Rights to the 2006 Declaration of Montreal on LGBTQ Human Rights. More recently, Montreal has also become a global hub of artificial intelligence expertise and innovation, most notably with the work of “father of AI” and Montreal Institute for Learning Algorithms founder Yoshua Bengio.

During her visit to Canada in 2019, United Nations High Commissioner for Human Rights Michelle Bachelet underscored that overlap in the city’s human rights and AI expertise when she traveled to Montreal to meet with technology executives, Canadian human rights scholars and activists. “I believe that our future — and the future of human rights — will depend enormously on the degree to which the technology community is able to embrace human rights principles into the very core of their work,” Bachelet said.

Inspired by that sentiment, the Montreal Institute for Genocide and Human Rights Studies at Concordia University hosted the Artificial Intelligence and Human Rights Forum this April 20-23. With the support of Global Affairs Canada, the Government of Quebec, the Embassy of the Kingdom of the Netherlands to Canada and the Canadian Commission to UNESCO, some of the world’s top minds gathered to discuss the intersection of this emerging technology and its impact on human rights and democratic values.

Two decades on from the dawn of the fourth industrial revolution, AI has already impacted human rights in two ways: through the regulatory and legal lag among democratic governments still catching up with technology, and by equipping authoritarian surveillance states with unprecedented tools for the undetected monitoring and hacking of opponents, the obliteration of privacy rights and other human rights violations.

During the MIGS AI and Human Rights Forum, representatives from the United Nations, the Council of Europe, the Organization for Security and Cooperation in Europe, Stanford University, Harvard, the University of Toronto, Amnesty International and UNESCO, among others, provided insight into many of the challenges AI technologies pose and offered concrete recommendations on steps that can be taken to ensure human rights are protected. The forum took place the same week the European Union unveiled its proposal for regulating AI and just a week before the U.S. Congress called on YouTube, Twitter and Facebook to explain how their algorithms operate. Likewise, the Canadian government is gearing up to regulate social media platforms in an effort to reduce online harms.

While its technological functions can seem opaque to non-experts, the current and potential impacts of AI on human rights are not theoretical. Governments, multilateral organizations, NGOs and academics are struggling to come up with better public policy proposals to respond to the impact of AI on society.

Here are five takeaways from the forum:

- The debate between those who believe that AI is a “weaponized propaganda machine” that amplifies misinformation online and those who argue that AI can be used to counter disinformation will continue. The COVID-19 pandemic has only accelerated the pace of disinformation and hate speech online, and while AI can indeed be used to take down or counter some of the AI-enabled computational propaganda and disinformation, using AI to fight AI is insufficient, and we will still require humans to act as content moderators.

- AI systems are increasingly being used to enable digital authoritarianism, threatening the liberal international order and democratic values. An increasing number of autocratic regimes use AI to repress human rights at home and abroad, and to realign the geopolitical world order. While China and Russia export their tools to like-minded regimes in South America and Africa, AI technologies developed in the West are also sometimes sold to autocrats with nefarious intentions. Worryingly, the use of surveillance tech by and within democratic states contributes to the normalization of digital authoritarianism. Liberal democracies are at a crossroads: the rise of digital authoritarianism is contributing to the global decline of democracy. There is an urgent need for stronger alliances of like-minded states and actors, such as Canada, the United States and European allies, to counter the harmful deployment of these technologies, both in democracies and autocracies.

- There is a clear need for regulation of AI and increased transparency. A hands-off approach to technology companies, combined with a lack of national and international regulations of AI, means that Big Tech companies and AI developers currently have the upper hand. States can reverse this power asymmetry by enacting policies that will keep tech giants in check, while at the same time protecting fundamental freedoms such as privacy and freedom of expression. As the European Union’s recent proposal for regulation of AI shows, there is growing consensus on the need for legal frameworks, including here in Canada. The EU’s attempt will certainly serve as a laboratory for governments, elected officials, the private sector, and civil society groups to build on. The future will be defined by our capacity to work together to ensure AI technologies are developed with human rights as a priority, not an afterthought.

- Despite all the fears about AI, it can be used for social good and finding solutions to real-world problems. Moving beyond issues such as ethics and governance, AI technologies can be put into practice to empower citizens, respond to the needs of communities, and solve global challenges. For example, AI can be put to work to deliver some of the 17 goals and 169 targets set out in the Sustainable Development Goals, including combating climate change, improving decision-making and good governance, and helping reduce poverty. AI technologies are also being used to shine a light on human rights abuses. Documentary filmmaker David France used AI technologies to chronicle the persecution of LGBTQ people in Chechnya, while protecting the identities of those who wish to speak out and flee this part of Russia. All technologies can have dual purposes: when protecting human rights and solving global problems are the main goal, AI can be used in innovative ways.

- Global cooperation and norm-setting are urgently required. As a transformational technology, AI has had and will continue to have a wide-ranging impact on human rights worldwide. This requires Canada, the United States, and other democratic states to play a role in ensuring the responsible manufacturing and deployment of AI, including by developing global norms. For AI to work for the advancement of human rights across regions and societies, civil society, parliamentarians, tech companies, and multilateral organizations must be part of the process.

As a world leader in AI, Canada has an integral role to play in ensuring that these technologies are designed and applied for the protection and advancement of human rights. The government of Canada is already well-engaged in efforts to build a rules-based digital sphere that can mitigate the threats brought by AI. As Foreign Affairs Minister Marc Garneau stated at the opening of the MIGS AI Forum, “We urgently need a democratic vision for the new digital age. Canada and other liberal democracies have a role to play in guiding this vision.” This includes Canada’s work as a member of the Freedom Online Coalition, as well as the creation of the Global Partnership on Artificial Intelligence and the Centre of Expertise in Montréal for the Advancement of AI. The Canadian government, parliamentarians, academic institutions, the private sector and civil society must continue these efforts and contribute to the United Nations Secretary-General’s roadmap for digital cooperation. The AI and Human Rights Forum will act as an important platform for these discussions and exchanges to continue well into the future.

You can view all the virtual panels from the MIGS Artificial Intelligence and Human Rights Forum, including participation from General Roméo Dallaire, Citizen Lab’s Ron Deibert, and others, on the MIGS YouTube channel.

Kyle Matthews is Executive Director of the Montreal Institute for Genocide and Human Rights Studies at Concordia University. Marie Lamensch is the institute’s Project Coordinator.