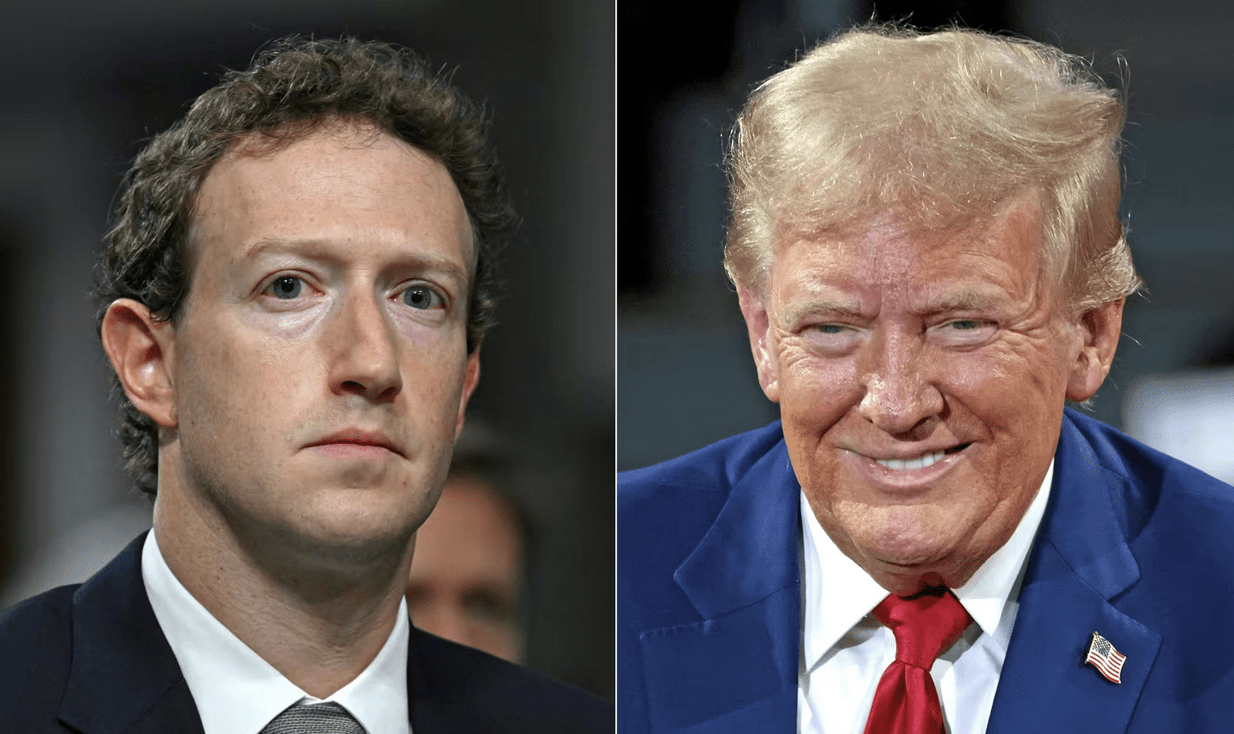

When Meta Met MAGA

AFP

By Aftab Ahmed

January 22, 2025

With his recent decision to axe Meta’s professional fact-checkers, Mark Zuckerberg has made the truth much harder to discern and online harms more difficult to prevent.

Zuckerberg has flung Meta headfirst into a political controversy, tactically syncing the company’s strategic direction with the free speech preferences of Donald Trump. Meta’s sweeping policy changes have been framed as a move to reduce social media censorship but, as the New York Times points out, the latest changes were catalyzed by Trump’s victory in November. “That month, Zuckerberg flew to Florida to meet with Mr. Trump at Mar-a-Lago,” per the Times on January 10th. “Meta later donated $1 million to the president-elect’s inaugural fund.”

Changes include the dismantling of professional fact-checking, the loosening of restrictions on divisive content, and a transition from a hands-on, institution-led content moderation model to a hands-off, user-driven laissez-faire system. Perhaps most politically pertinent as a sign of the shift in power in Washington, Meta has also suspended its work on diversity, equity, and inclusion (DEI), eliminated its chief diversity officer role, and said it will no longer prioritize minority-owned businesses in contracting.

Big Tech has long been urged to serve as the first line of defence in constraining a chaotic information ecosystem. Through a corporate social responsibility lens, Meta has, to date, fallen short of what that role demands.

From a business standpoint, the emerging narrative is one of calculated risk-taking, evidently aimed at appeasing Trump, the tech oligarchs in his orbit, and his political base. The fallout is likely to be anything but orderly.

First, there is likely to be a deluge of misinformation and other online harms, adding to an already overwhelming flood of untruth and anti-truth. Disarming moderation guardrails will turn Meta’s platforms into even more fertile breeding ground for reality-obfuscating conspiracy theories, manipulative propaganda, and other negative social externalities.

Second, Meta’s reliance on a decentralized, crowdsourced community-notes system — where users flag problematic posts — will result in significant delays in tackling harmful content. Since this model depends on user-driven triggers before any review begins, harmful posts will spread widely before corrective action is taken, if it happens at all.

Third, Meta’s timing lays bare a calculated effort to reset ties with Trump. Zuckerberg’s repeated attempts to cosy up to political power to advance Meta’s interests are part of an alarming pattern in recent years, in which Big Tech tycoons have been trading off the common good to secure tangible influence over public policymaking and regulation.

Fourth, relaxing restrictions on polarizing topics will deepen ideological echo chambers, further locking users into spaces where they consume and share information based on pre-existing beliefs. Users will have little incentive to understand opposing viewpoints, thereby worsening tensions on issues like immigration and gender.

Finally, Meta risks empowering authoritarian regimes by giving state-sponsored propaganda carte blanche to flourish and by further enabling the weaponization of the digital sphere. The Rohingya crisis serves as an important reference point: unregulated platforms, mainly Facebook, were used to stoke ethnic violence in Myanmar that culminated in what the United States classified as genocide and crimes against humanity.

Meta’s dominance in the digital economy is a textbook case of monopoly power. With Facebook, Messenger, Instagram, Threads and WhatsApp commanding more than three billion monthly active users worldwide, Meta faces no competition capable of challenging its influence. That monopoly, when exercised in aid of a particular ideology or regime, especially one with a proven anti-democracy agenda, essentially gives it the power of a conventional intelligence entity without the oversight.

Digital platforms benefit from network effects: the more users a platform has, the more valuable it becomes, and the harder it is for competitors to gain traction. This allows Meta to enact controversial policies with little risk of mass user defection. Even disillusioned users are left with few realistic alternatives in a market where Meta has consolidated its grip on communication and information-sharing.

And, by slashing professional fact-checking costs and shifting oversight to algorithms and users, it can cut expenses while maintaining engagement — a key metric for its $131 billion advertising empire. Polarization, while toxic to social cohesion, is a profitable business model.

Inflammatory and emotionally charged content generates higher engagement, a dynamic well documented in a 2018 study, which found that fake news travels far faster than truth online. The economic incentive for Meta is straightforward: allow provocative content to flourish, boost user interaction, and watch advertising revenue climb.

Meta’s abandonment of specialized fact-checkers also highlights its monopsony power, which arises when a dominant buyer (here, Meta) exerts outsized influence over its suppliers (here, the labour force responsible for content oversight). Content moderation is an expensive, gruelling job, outsourced to low-paid contractors who sift through disturbing material under psychologically taxing conditions.

A 2019 investigation revealed the mental health toll on these workers, many of whom reported PTSD-like symptoms. Calls for better working conditions and higher wages were predictable, and Meta has decided to respond by minimizing its dependence on human labour altogether. Economically, this ticks all the boxes of what constitutes efficiency. Socially, it is reckless.

Bottom line: Meta is leaving society exposed to an unfiltered surplus of harmful content. Over time, this threatens to reshape the very fabric of democratic discourse, blurring the line between fact and falsehood, and redefining how humanity understands or seeks to understand truth in a polarized world.

Without institution-led editorial oversight or gatekeeping designed to prevent real-world harm, Meta risks becoming the worst version of itself: a global accelerant for public harm arising from social media.

Policy Contributing Writer Aftab Ahmed graduated with a Master of Public Policy degree from the Max Bell School at McGill University. He is a columnist for the Bangladeshi newspapers The Daily Star and Dhaka Tribune. He is currently a Policy Development Officer with the City of Toronto.

The views expressed in this article are personal opinions and do not reflect the views or opinions of any organization, institution, or entity associated with the author.